Complex zero-downtime schema migrations in Postgres

I recently released a new version of Reshape, a tool for automating zero-downtime schema migrations in Postgres. I’m quite excited about this new version as it automates schema migrations that require changes across multiple tables, all whilst ensuring zero downtime for both your application and database. I’ll lay out an example of such a complex, multi-table migration here but you might also like to read the introductory blog post for Reshape first.

Until now, Reshape supported a quite wide variety of migrations but they were all limited to a single table. With the latest version, Reshape can now make schema migrations that span multiple tables, including backfilling data in new tables and changing the structure of data that is spread out across tables. Let’s go through an example of where this can be useful: going from a 1:N relationship to N:M!

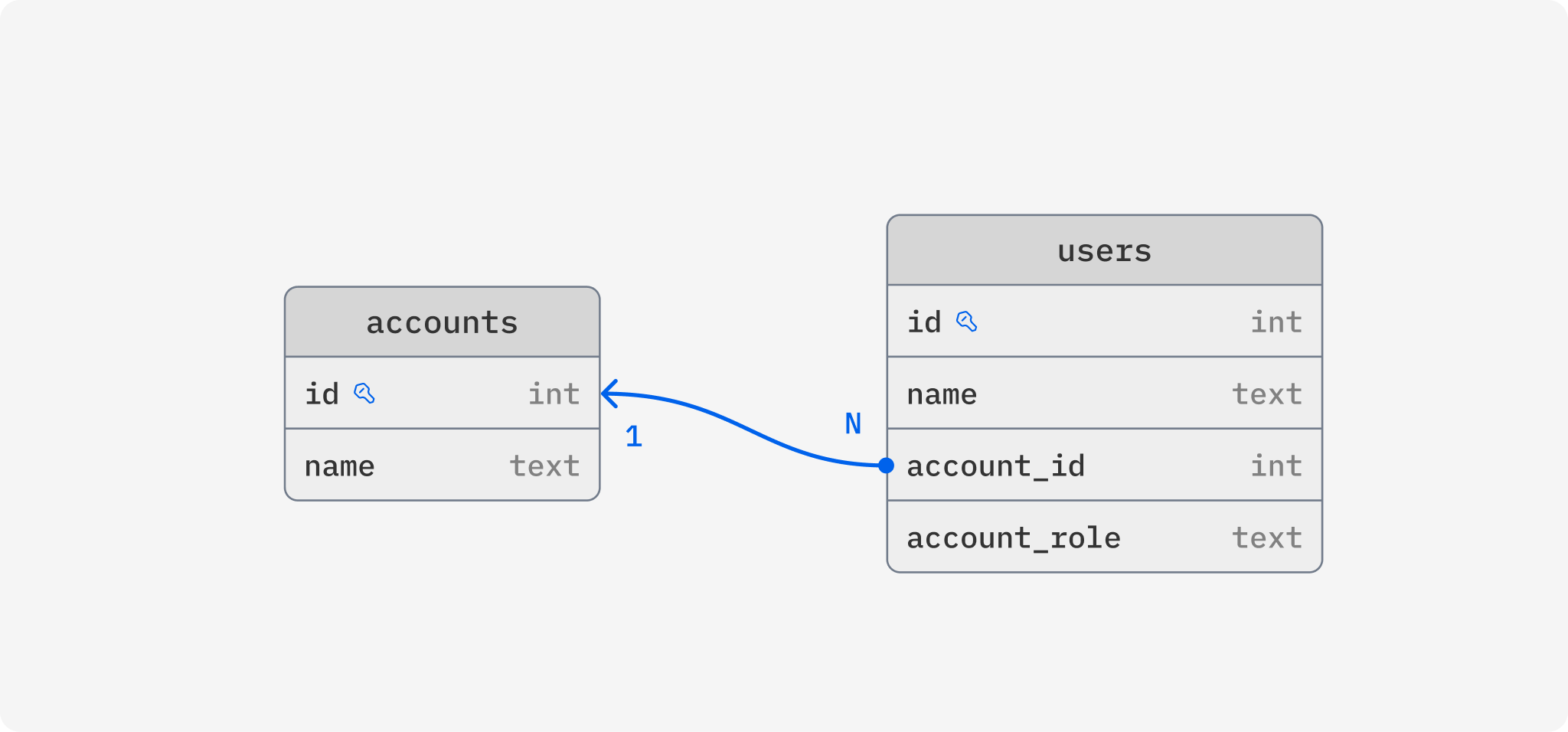

Say we have an application where users can sign in and access data tied to an account, and multiple users can have access to one account. In our database schema, we are representing this with two tables, users and accounts, where users has a column that references the account it belongs to and it also contains a column that tells us which role this user has for the account.

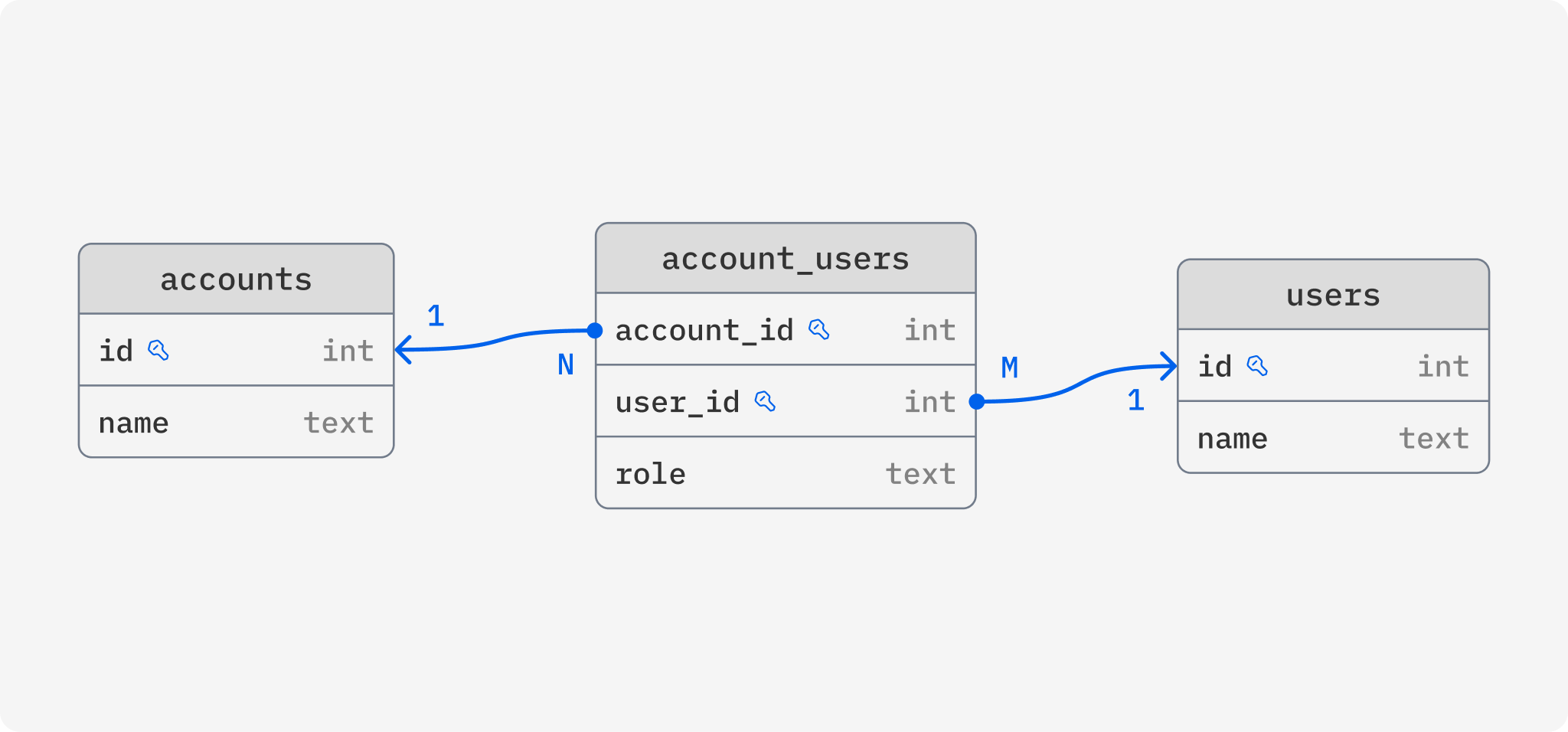

This database schema is serving us well but now we have a new feature we want to implement, a single user should be able to have access to several accounts, with potentially different roles for each. To make this feature a reality, we would have to change database schema to support a many-to-many relationship between users and accounts. Preferably, our database schema should look something like this:

Making this change without breaking our application or bringing it down is not easy. To ensure zero downtime, we would have to go through these steps manually:

- Add the new

account_userstable and update application to write both to the new table as well as the existing columns onusers(deploy) - Write and run a script that backfills

account_usersbased on the existing data inusers - Update application to read from the

account_userstable (deploy) - Update application to stop writing to the columns on

users(deploy) - Remove

account_idandaccount_rolecolumns onusers

That’s a lot of manual work! Several pull requests and deploys and a lot of waiting, with plenty of room for human errors. What would the process look like with Reshape?

- Write a migration and update your application to read and write to

account_usersinstead (deploy it all in one go)

The magic here of course lies in how you write that migration. You define how to translate between the new and old schema, and Reshape takes care of the rest. More specifically, here’s what the Reshape migration definition could look like:

# Add `account_users` as a junction table

[[actions]]

type = "create_table"

name = "account_users"

primary_key = ["account_id", "user_id"]

[[actions.columns]]

name = "account_id"

type = "INTEGER"

[[actions.columns]]

name = "user_id"

type = "INTEGER"

# `role` is currently stored directly on the `users` table but is part of the relationship

[[actions.columns]]

name = "role"

type = "TEXT"

nullable = false

# Tell Reshape how to backfill the new table from `users`

# In `values`, we map a `users` row to a row in this new `account_users` table

# `where` tells Reshape how to match rows in `users` with the new table

[actions.up]

table = "users"

values = { user_id = "id", account_id = "account_id", role = "account_role" }

where = "account_users.user_id = users.id"

# Remove `account_id` from `users` as this relation is now handled by the new `account_users` table

[[actions]]

type = "remove_column"

table = "users"

column = "account_id"

# When `account_users` is updated, we write the `account_id` back to `users`

[actions.down]

table = "account_users"

value = "account_users.account_id"

where = "users.id = account_users.user_id"

# Remove `account_role` from `users` as this is now tracked for each account in the `account_users` table

[[actions]]

type = "remove_column"

table = "users"

column = "account_role"

# When `account_users` is updated, we write the role back to `users`

[actions.down]

table = "account_users"

value = "account_users.role"

where = "users.id = account_users.user_id"

During your deploy of this migration and your application changes, Reshape will set up triggers and views to make sure both the old and new schema are accessible simultaneously. This is important because during the deploy of your new application, there will be some time where both the old and new application version are running and interacting with the database at the same time. When your old application makes a write to the old account_id and account_role columns, Reshape will automatically make the corresponding changes to account_users, and vice versa if your new application makes changes. On top of that, Reshape will automatically run a backfill to ensure account_users is fully populated, so once your new application rolls out, it can read from the table and be certain all the data it expects will be there.

I’d be remiss not to mention that many application don’t need zero-downtime schema migrations. In many cases, it’s completely fine to bring your application down fully to make schema changes, in which case you wouldn’t need something like Reshape! I do believe Reshape becomes even more powerful for more complex migrations like the one above though. Making simple changes to your database might only take a few seconds of downtime, but running a backfill can take much longer depending on the size of your data.

If you’d like to chat about Reshape or schema migrations in general, please reach out! You might also be interested in a new project I’m working on which takes these ideas to the next level: ReshapeDB, an all new database built from the ground up to make complex schema and data migrations simple and safe. Reach out if you’d like to know more about that!